V Model in Agile

[This blog post was inspired by a question on Quora]

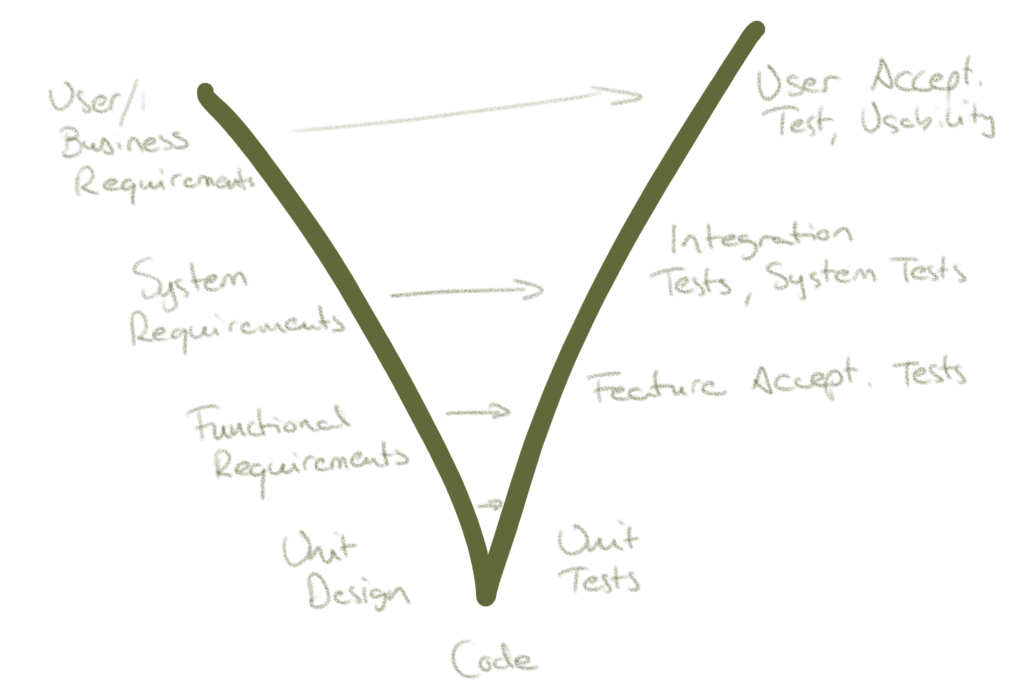

The V model is a conceptual model that connects design, requirements, and needs on one side with the confirmation that the system meets those needs on the other. In waterfall, it is unfortunately interpreted as a staged model, where each stage is completed before the next one begins. For example, we want to consider user needs and business requirements as a whole before going into smaller details. On the other side of the V are the verification and validation steps that confirm the system meets the identified capabilities. First we go down the left arm of the V, and then climb back up the right arm.

This is a logical model: think of the bigger picture before smaller details, and consider how to confirm that the system works accordingly at each stage. But the way the waterfall approach uses it rests on a huuuuge assumption behind the staged model: that the requirements identified at each level DO NOT CHANGE while we work through the deeper stages of the V. A lot of people using waterfall approaches do not seem to understand how critical that assumption is, or how much the successful use of the model depends on it.

In an Agile context, we are very aware that requirements change over time and as we learn more about them. Therefore we do not want to commit to a fixed set of requirements at any point, except for the very small step in which we implement a very narrow capability. In this context, the V model is not a stage model but rather a reminder that our system needs different levels of verification and validation (verification checks that the system works as we designed it; validation checks that it is actually the right thing to build and that it works in the business context). For every small increment, we need to consider all applicable levels.

There are, therefore, unit-level tests that ensure that small unit-level components behave as intended. These tests are run after every small code-level modification, as part of the development flow. They also confirm that our modification has not changed the behavior of the units around the modified code.
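To make the unit level concrete, here is a minimal sketch using Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical, chosen only for illustration. Each test pins down one intended behavior, so a later code change that breaks it fails immediately in the development flow.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each test captures one intended behavior of the unit, so any
    # modification that changes that behavior is caught right away.
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 120)


if __name__ == "__main__":
    unittest.main()
```

Because tests like these are fast and have no external dependencies, they can run on every save or commit.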

There are feature/system-level tests that confirm that the feature behaves as intended. These tests are usually run in isolation, i.e., the system is separated from its external dependencies so that we can control the test data and the tested operations. Specifically, they test that our product works as we designed it. They may also cover things like performance, load, error handling, security, etc.
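A feature-level test in isolation might look like the following sketch. `OrderService`, `PaymentError`, and the gateway's `charge` method are hypothetical names; the external payment system is replaced with a `unittest.mock.Mock`, which lets the test control both the normal path and a failure condition without touching any real external system.

```python
import unittest
from unittest import mock


class PaymentError(Exception):
    """Hypothetical error raised by the external payment system."""


class OrderService:
    """Hypothetical feature under test; the gateway is injected so tests can replace it."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, order_id: str, amount: float) -> str:
        try:
            self.gateway.charge(order_id, amount)
        except PaymentError:
            return "payment_failed"
        return "confirmed"


class PlaceOrderFeatureTest(unittest.TestCase):
    def test_successful_payment_confirms_order(self):
        gateway = mock.Mock()  # stands in for the external system
        service = OrderService(gateway)
        self.assertEqual(service.place_order("A-1", 42.0), "confirmed")
        gateway.charge.assert_called_once_with("A-1", 42.0)

    def test_payment_failure_is_handled(self):
        gateway = mock.Mock()
        # Controlled failure: the stub raises instead of charging.
        gateway.charge.side_effect = PaymentError("declined")
        service = OrderService(gateway)
        self.assertEqual(service.place_order("A-2", 10.0), "payment_failed")


if __name__ == "__main__":
    unittest.main()
```

Injecting the dependency is the design choice that makes the isolation possible; the same service is wired to the real gateway in integration tests.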

There should be integration tests that confirm that our system works in concert with the systems it interfaces with. These tests depend on the operation of the external systems, but if our feature-level tests pass and are well designed, any failure at this level should indicate either a requirements/behavioral incompatibility between the systems or a failure in an external system. Large software ecosystems probably have multiple levels of integration tests. These tests may also check, e.g., how failure conditions are handled between the systems.

Many advanced teams also test the deployment of the product, sometimes to the point that if the deployment succeeds, the new version is put directly into use.

Lastly, we should have tests that validate that our product actually meets the users’ and business needs. While all the earlier levels of testing should be fully or largely automated, this level is often manual, because it relies on human judgment of whether the built capabilities are the right ones.

In Agile, these different levels are considered continuously and simultaneously as we gradually extend the capabilities of the system. When a lower-level test set passes, it triggers the next level, and so on. Every small capability addition may extend any or all of the levels, as needed. There is always a test harness that exactly matches the product itself, so that every modification can be fully tested against its specific features.
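The triggering chain can be sketched as a tiny runner. The level names follow this post, but `run_pipeline` and the `lambda` stand-ins are hypothetical; in practice this is usually expressed as stages in a CI/CD tool. Manual validation is left out because it depends on human judgment rather than an automated pass/fail.

```python
from typing import Callable, List, Tuple

# A test level is a name plus a callable that returns True when the level passes.
Level = Tuple[str, Callable[[], bool]]


def run_pipeline(levels: List[Level]) -> List[Tuple[str, str]]:
    """Run test levels in order; a failing level stops the pipeline,
    so higher levels are only triggered once the lower ones pass."""
    report = []
    for i, (name, run) in enumerate(levels):
        if run():
            report.append((name, "passed"))
        else:
            report.append((name, "failed"))
            # Record the higher levels that were never triggered.
            report.extend((later, "skipped") for later, _ in levels[i + 1:])
            break
    return report


if __name__ == "__main__":
    levels = [
        ("unit", lambda: True),
        ("feature/system", lambda: True),
        ("integration", lambda: False),  # e.g. an incompatibility with an external system
        ("deployment", lambda: True),
    ]
    for name, status in run_pipeline(levels):
        print(f"{name}: {status}")
```

Here the integration failure stops the chain, so deployment is reported as skipped rather than run against a version that is not known to work with its neighbors.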

Note that the terminology used in various versions of the V model may differ, but whatever the naming, the basic idea is the same. Some people also seem to use the terms “verification” and “validation” slightly differently than I do.

EDIT: Antti Kaltiainen commented on this on LinkedIn:

There are different definitions for software validation; nevertheless, your post is somewhat aligned with the one used in medtech (using the IEC 62304 SW lifecycle standard and its V-model). I agree with most of the concepts in your post. Still, it is very burdensome to complete the validation in many cases, particularly in medical, as it requires on-site validation with more or less real end-users. Verification also needs to be fully complete, which does not make sense in every case that includes manual testing. Roughly, my proposal is to run the V-model “right-hand side” up to ~80% of verification per sprint, and go for full verification and validation only at release (and for notified body or FDA submission). This is about optimizing batch size (and content), a good lesson from Henri Hämäläinen back in the day.

Yes, the higher up we go in the V model, the harder it may be to do it all within a Sprint. I’m “willing to concede on that” :D. Similar cases arise with, e.g., security testing.